The production values are high and the message is compelling. In an 11-minute mini-documentary, Facebook acknowledges its mistakes and pledges to “fight against misinformation”.

“With connecting people, particularly at our scale, comes an immense amount of responsibility,” an unidentified Facebook executive in the film solemnly tells a nodding audience of new company employees.

An outdoor ad campaign by Facebook strikes a similar note, plastering slogans like “Fake news is not your friend” at bus stops around the country.

But the reality of what’s happening on the Facebook platform belies its gauzy public relations campaign.

Last week CNN’s Oliver Darcy asked John Hegeman, the head of Facebook’s News Feed, why the company was continuing to host a large page for Infowars, a fake news site that traffics in repulsive conspiracy theories. Alex Jones, who runs the site, memorably claimed that the victims of the Sandy Hook mass shooting were child actors.

Hegeman did not have a compelling answer. “I think part of the fundamental thing here is that we created Facebook to be a place where different people can have a voice. And different publishers have very different points of view,” Hegeman said.

Claiming the Newtown massacre is a hoax is not a point of view. It’s a disgusting lie – but a lie that, apparently, Facebook does not see as out of bounds.

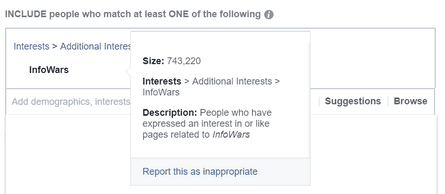

Facebook does not just tolerate Infowars. It seeks to profit from Infowars and its audience. Facebook’s advertising tools, at the time of writing, allow advertisers to pay Facebook to target the 743,220 users who “like” the Infowars page.

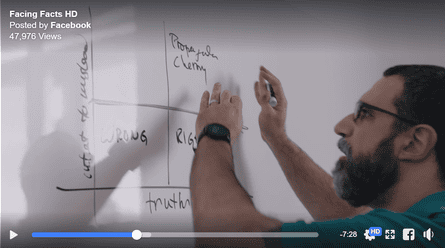

In the Facebook documentary, Eduardo Ariño de la Rubia, a data science manager at Facebook, offers more insight into what kind of content the company believes is unacceptable. De la Rubia says Facebook evaluates content on two metrics, “truth” and “intent to mislead”.

De la Rubia draws a simple chart with “truth” on the x-axis and “intent to mislead” on the y-axis, creating four quadrants. Only information in the upper left of the chart, low on “truth” and high on “intent to mislead”, should be purged from Facebook, he says. (Without an “intent to mislead”, de la Rubia says, it’s just being “wrong on the internet”.)

De la Rubia offered “Pizzagate”, the hoax that claimed prominent Democrats were running a child sex trafficking ring out of the basement of a DC pizza parlor, as an example of the kind of unacceptable content that would fall into the upper-left quadrant.

That conspiracy was promoted by none other than Infowars’ Alex Jones. (Jones apologized after a man entered the pizza shop and opened fire.)

The problem with Facebook’s strategy seems to be less its theoretical framework than its practical refusal to place almost anything into the upper-left quadrant. Zuckerberg threw his deeply flawed approach into stark relief during an interview with Recode’s Kara Swisher on Wednesday.

Interrupting Swisher, Zuckerberg volunteered that he found Holocaust denial “deeply offensive” but would not ban Holocaust deniers from Facebook because it’s “hard to impugn intent and to understand the intent”.

Zuckerberg’s position echoes the company’s promotional video. “There is a lot of content in the gray area. Most of it probably exists where people are presenting the facts as they see them,” Tessa Lyons, Facebook’s project manager for News Feed integrity, says to the camera.

This is where Facebook’s approach completely breaks down. If Zuckerberg is willing to give Holocaust deniers the benefit of the doubt – and therefore keep them on the Facebook platform – it’s clear that Facebook’s pledge to eliminate misinformation is itself fake news.

Zuckerberg, facing an avalanche of criticism, later released a statement saying he “absolutely didn’t intend to defend the intent of people who deny” the Holocaust. He did not, however, back away from his core position – that Holocaust deniers have a place on Facebook.

Facebook is trying to have it both ways. The company is actively seeking credit for fighting misinformation and fake news. At the same time, its CEO is explicitly saying that information he acknowledges is fake should be distributed by Facebook.

Zuckerberg seems to believe that technology will get the company out of this jam. “We have to do everything in the form of machine learning,” Henry Silverman, an operations specialist for News Feed integrity, says earnestly in Facebook’s documentary about fighting fake news.

The solution, in Zuckerberg’s view, appears to be to allow virtually anything to be posted and then drown it out with heaps of baby pictures. In his statement clarifying his comments on Holocaust deniers, Zuckerberg said that verifiably false information would “lose the vast majority of its distribution in News Feed”, adding that he believed “the best way to fight offensive bad speech is with good speech”.

But the idea that people will be willing to tolerate Holocaust deniers on Facebook if those posts reach a few fewer people ignores the moral component of these decisions. There is no algorithm for human decency.

- Judd Legum writes Popular Information, a political newsletter